Researchers at Google have released a new language model called 123B. The model was trained on a dataset of remarkable size, containing text drawn from a wide range of sources. The aim of this research is to investigate what happens when language models are scaled to massive sizes and to illustrate the benefits such an approach can bring. The 123B model has already shown outstanding performance on a variety of tasks, including text generation.

Furthermore, the researchers carried out a thorough evaluation of the relationship between the size of a language model and its performance. Their findings suggest a strong correlation between model size and performance, supporting the hypothesis that scaling language models can lead to substantial improvements in their capabilities.
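To make the idea of a size-performance correlation concrete, here is a minimal Python sketch that computes the Pearson correlation between the logarithm of parameter count and a task score. The function name `pearson` and every number below are hypothetical placeholders chosen for illustration; they are not data or results from the 123B study, and the study's actual evaluation method is not described here.

```python
import math


def pearson(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance numerator and per-variable spreads (the 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical placeholder values, NOT measurements from the 123B research.
param_counts = [1e8, 1e9, 1e10, 1.23e11]
scores = [0.41, 0.52, 0.63, 0.71]

# Model size is usually compared on a log scale, since sizes span orders of magnitude.
log_sizes = [math.log10(p) for p in param_counts]
r = pearson(log_sizes, scores)
print(f"Pearson r between log10(size) and score: {r:.3f}")
```

Correlating against the log of the parameter count rather than the raw count is a common design choice, because model sizes differ by orders of magnitude and raw values would be dominated by the largest model.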

Exploring the Potential of 123B

The new large language model, 123B, has attracted significant interest within the AI community. The model is known for its broad knowledge base and a remarkable ability to produce human-quality text.

From completing assignments to taking part in engaging discussions, 123B demonstrates a wide range of abilities. Researchers continue to probe the limits of this exceptional model, uncovering new and creative applications in domains such as education.

123B: A Benchmark for Large Language Models

The field of large language models (LLMs) is advancing at a remarkable pace. To measure the performance of these sophisticated models rigorously, a standardized benchmark is crucial. Enter 123B, a comprehensive benchmark designed to test the mettle of LLMs.

More precisely, 123B comprises a varied set of benchmarks covering a wide range of language abilities. Spanning tasks such as text generation, 123B seeks to provide an objective measure of an LLM's proficiency.

Moreover, the open-source nature of 123B encourages collaboration within the machine learning community. This unified framework supports the advancement of LLMs and drives innovation in artificial intelligence.

The Impact of Scale on Language Understanding: Insights from 123B

The field of natural language processing (NLP) has seen remarkable progress in recent years, driven largely by the increasing size of language models. A prime example is the 123-billion-parameter 123B model, which has shown exceptional capabilities across a range of NLP tasks. This article examines the influence of scale on language understanding, drawing on insights from the success of 123B.

Specifically, we will examine how increasing the number of parameters in a language model affects its ability to capture linguistic nuances. We will also explore the trade-offs associated with scale, including the challenges of training and deploying large models.

Furthermore, we will highlight the opportunities that scale presents for future advances in NLP, such as generating more natural text and performing complex reasoning tasks.

Ultimately, this article aims to offer a thorough understanding of the crucial role that scale plays in shaping the future of language understanding.

The Rise of 123B and its Impact on Text Generation

The release of the 123-billion-parameter language model, 123B, has sent ripples through the AI community. This breakthrough in natural language processing (NLP) demonstrates the rapid progress being made in generating human-quality text. With its ability to understand complex language, 123B has opened up a wealth of possibilities for applications ranging from content creation to interactive dialogue.

As engineers continue to explore the capabilities of 123B, we can expect even more groundbreaking developments in AI-generated text. The model has the potential to transform industries by automating tasks that were once the exclusive domain of human creativity.

- However, it is essential to consider the ethical implications of such advanced technology.

- Thoughtful development and deployment of AI-generated text are needed to ensure that it is used for constructive purposes.

In conclusion, 123B represents a major milestone in the advancement of AI. As we venture into this new territory, we should approach the future of AI-generated text with both enthusiasm and responsibility.

Unveiling the Inner Workings of 123B

The 123B language model, a colossal neural network with some 123 billion parameters, has captured the imagination of researchers and developers alike. This achievement in artificial intelligence offers a glimpse into the potential of machine learning. To truly grasp 123B's impact, we must examine its inner workings.

- Examining the model's architecture provides key insight into how it processes information.

- Understanding its training data, a vast archive of text and code, sheds light on the influences shaping its outputs.

- Uncovering the algorithms behind 123B's learning mechanisms helps us better steer its behavior.

Ultimately, a comprehensive analysis of 123B not only deepens our understanding of this remarkable AI but also paves the way for its sustainable development and use in the future.

Ralph Macchio Then & Now!

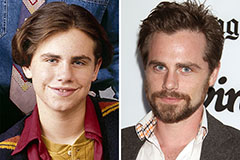

Ralph Macchio Then & Now! Rider Strong Then & Now!

Rider Strong Then & Now! Michael Oliver Then & Now!

Michael Oliver Then & Now! Alexa Vega Then & Now!

Alexa Vega Then & Now! Shannon Elizabeth Then & Now!

Shannon Elizabeth Then & Now!